Google’s Project Tango has been shaping up quickly since we got our first look at the programme. While we are not close to a retail product yet, the latest Wall Street Journal report on Tango suggests the second device will be a small form factor tablet with an advanced 3D imaging camera. Google is reportedly producing 4,000 prototypes of this tablet next month. But what’s so special about a tablet or a software platform that can capture 3D images of objects? That’s just scratching the surface, of course; Project Tango is more than a 3D camera platform. Let’s find out what it’s all about.

What is it?

“What if you could capture the dimensions of your home simply by walking around with your phone before you went furniture shopping? What if directions to a new location didn’t stop at the street address? What if you never again found yourself lost in a new building?”

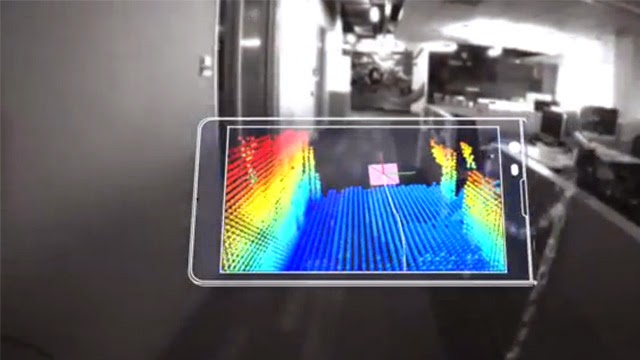

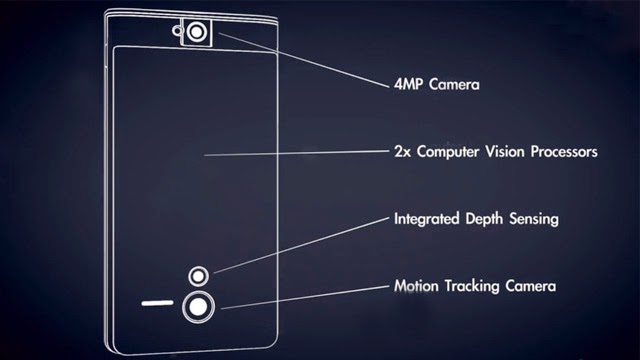

That’s how Google described Project Tango in its introduction. All of this is possible thanks to 3D depth sensing and motion detection. Project Tango devices can measure depth, tell how far away the objects in a room are, whether they are moving closer or staying still, and how much space they occupy. They do it all in real time thanks to a custom-developed Movidius Myriad 1 vision processor, a one-of-its-kind low-power companion chip, somewhat like Apple’s M7 motion coprocessor.

Google’s Advanced Technology and Projects group is the force behind Project Tango, just as it is behind the Ara modular phone project. The first Project Tango devices are believed to have two rear cameras along with a bevy of infrared and silent depth sensors.

So how does it work?

The real magic is in the software. It’s essentially like packing a Kinect into a smartphone. The device projects a grid of dots onto objects, and depending on where those dots land, the software works out what is in the room and how far away it is. An iFixit teardown of the first Tango prototype smartphone revealed this about the algorithm behind the software: “The algorithm cross-correlates groups of dots from the captured image of the projected dots with the pattern stored. Since the pattern is pseudo-random, each group of dots will have a unique fingerprint. Depth/distance is then calculated from the deviation in position of each dot in the sub-group related to its position in the stored pattern.”
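To make the iFixit description a little more concrete, here is a minimal Python sketch of the general idea, not Tango’s actual code. It works on a single row of dots, finds where a group of dots from the stored pattern shows up in the captured row, and converts that shift into a distance with plain triangulation. The function names, the window size, the focal length and the baseline are all made up for illustration, and the depth formula assumes the simplest possible geometry.

```python
import random


def make_pattern(length, seed=0):
    """Build a pseudo-random row of dots (1 = dot, 0 = no dot)."""
    rng = random.Random(seed)
    return [rng.randint(0, 1) for _ in range(length)]


def find_shift(stored, captured, start, window):
    """Find how far a group of dots has moved in the captured row.

    Takes the group stored[start:start + window], slides it along the
    captured row, and returns the offset of the best match minus the
    group's original position (the shift in pixels).
    """
    template = stored[start:start + window]
    best_offset, best_score = 0, -1
    for offset in range(len(captured) - window + 1):
        candidate = captured[offset:offset + window]
        # Score = number of positions where the dots agree.
        score = sum(1 for a, b in zip(template, candidate) if a == b)
        if score > best_score:
            best_offset, best_score = offset, score
    return best_offset - start


def depth_from_shift(shift_px, focal_px, baseline_m):
    """Simplified triangulation: depth = focal length * baseline / shift.

    Assumption: the stored pattern corresponds to a surface infinitely
    far away, so a larger shift means a closer object.
    """
    if shift_px <= 0:
        return float("inf")
    return focal_px * baseline_m / shift_px


if __name__ == "__main__":
    stored = make_pattern(256, seed=1)
    # Simulate a capture in which every dot has moved 5 pixels to the right.
    captured = [0] * 5 + stored
    shift = find_shift(stored, captured, start=100, window=32)
    # Hypothetical optics: 500 px focal length, 10 cm projector-camera gap.
    print("shift (px):", shift)
    print("depth (m):", depth_from_shift(shift, focal_px=500.0, baseline_m=0.1))
```

Because the pattern is pseudo-random, a group of 32 dots is very unlikely to repeat elsewhere in the row, which is what lets the search settle on a single position. A real device would do this in two dimensions, for many groups at once, and with a calibrated reference distance.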

Built for Android

Just like Project Ara, Tango is built for Android phones and tablets. The introduction video clearly shows an Android device as the base. Project Tango has been available for developers to experiment with and build apps for indoor navigation, real-world and augmented reality gaming, and new algorithms for processing sensor data. Google will let developers tap into Project Tango via APIs so they can integrate its hardware and software capabilities into their image-capture apps, games, navigation systems, mapping applications and so on. It’s not yet clear whether Google intends to launch a separate Project Tango app storefront. Since the first development toolkit is already out, app makers already have an idea of what Project Tango is capable of.

Who is it for?

Project Tango is all about interacting with real-world objects, so its applications range from personal safety to navigation to public spaces. For example, by combining Project Tango with haptic feedback, you could help visually impaired people navigate a room without any other aid. Or your Android phone could alert you when it spots an object in a store that it knows you have searched for in the past. You may not want to buy it anymore, but Google wants to nudge you nonetheless.

The biggest advantage Google has is that it’s building Project Tango for mobile devices. Smartphones travel everywhere with their users, which lets Tango tap into pretty much every real-world place, so the possibilities for apps are far greater than on a game console or a PC. Google has already taken big strides in contextual computing with software offerings such as Google Now and predictive search. By combining these with computer vision, it can expand the horizons of contextual computing even further and make it more relevant in everyday life.
